Schools, offices, and some homes are equipped with computer networks: wires that connect computers together, plus the software and special hardware that allow the computers to communicate with each other. Networks let people send information to each other through their computers. But how does this really work?

Computers use electricity to perform calculations on binary numbers. Arbitrarily chosen voltages represent 0 and 1, and those voltages can be sent along a wire, even a long one, and still be read as the same numbers at the receiving end. This works well as long as the two computers are connected. However, if two wires are needed to connect any two computers, then six wires are needed to fully connect three computers to each other and twelve to connect four. A room with thirty networked computers would be full of wires (870 in total, 58 of them attached to each computer)!
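The wire counts above follow a simple pattern: n computers form n(n - 1)/2 distinct pairs, and with two wires per pair (the paragraph's assumption) that is n(n - 1) wires in all. The short sketch below checks the numbers; the function name `wires_needed` is just an illustrative choice.

```python
def wires_needed(computers: int, wires_per_link: int = 2) -> int:
    """Total wires needed to connect every computer directly to every other one."""
    # Each of the n computers links to the other n - 1; dividing by 2
    # counts each link once. Each link then uses `wires_per_link` wires.
    links = computers * (computers - 1) // 2
    return links * wires_per_link


for n in (2, 3, 4, 30):
    print(n, "computers:", wires_needed(n), "wires")
# 2 computers: 2 wires
# 3 computers: 6 wires
# 4 computers: 12 wires
# 30 computers: 870 wires
```

Because the total grows roughly with the square of the number of computers, direct wiring quickly becomes impractical, which motivates the shared-connection schemes described next.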

Hawaii has an unusual problem when it comes to computer network communication: it is a collection of islands, and linking them by cables is an expensive proposition. In the early 1970s, the technicians at the University of Hawaii decided to link the computers using radio. Radio transmission is similar to wire transmission in many practical ways, and allocating 35 radio frequencies to connect one computer on each island to all of the others would have been possible, but they had a better idea: a single radio link for all computers. When a computer wanted to send information along the network, it would listen to see if another computer was already doing so. If so, it would wait. If not, it would begin to send data to all of the other computers, including in the transmission a code for which computer was supposed to receive it. Every computer could hear the transmission, but each could tell from the code whether it was the intended destination, and the others would ignore it. This system was called Alohanet.
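The shared-channel idea can be modeled in a few lines: one channel, a "listen first" check, a broadcast to every station, and a destination code that lets each station decide whether to keep or ignore what it hears. This is a toy sketch, not how Alohanet was actually programmed; the classes `Frame`, `Station`, and `SharedChannel` and the island station names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    destination: str  # code naming the computer that should receive the data
    sender: str
    data: str


class Station:
    def __init__(self, name: str):
        self.name = name
        self.inbox: list[str] = []

    def hear(self, frame: Frame) -> None:
        # Every station hears every transmission, but only the addressed
        # station keeps it; all the others ignore it.
        if frame.destination == self.name:
            self.inbox.append(frame.data)


class SharedChannel:
    """A single radio frequency shared by all stations (toy model)."""

    def __init__(self, stations: list[Station]):
        self.stations = stations
        self.busy = False  # True while some other transmission is in progress

    def send(self, frame: Frame) -> bool:
        if self.busy:
            return False  # someone is already sending: wait and try later
        self.busy = True
        for station in self.stations:  # broadcast to everyone else
            if station.name != frame.sender:
                station.hear(frame)
        self.busy = False
        return True


oahu, maui, kauai = Station("Oahu"), Station("Maui"), Station("Kauai")
channel = SharedChannel([oahu, maui, kauai])
channel.send(Frame(destination="Maui", sender="Oahu", data="aloha"))
print(maui.inbox, kauai.inbox)  # ['aloha'] []
```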

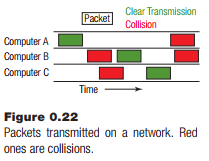

There is a problem with this scheme. Two or more computers could try to send at almost the same time, each having noted that no other computer was sending when it checked. This is called a collision, and it is relatively easy to detect because the data received is nonsense. When that happens, each computer waits for a random time, checks again, and tries again to send the data. An analogy would be a meeting where many people are trying to speak at once.
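The collision-and-retry rule can be simulated by dividing time into slots. In the minimal sketch below (an assumption-laden model, not a real protocol implementation), each station picks a random slot; a slot chosen by exactly one station is a successful send, while a slot chosen by two or more is a collision, after which each colliding station waits a new random number of slots. The function name `send_all` and its parameters are hypothetical.

```python
import random


def send_all(stations: list[str], max_wait: int = 8,
             seed: int = 1) -> list[tuple[int, str]]:
    """Return (slot, station) pairs in the order frames got through."""
    rng = random.Random(seed)  # seeded so a run can be repeated
    # The slot in which each station will next try to transmit.
    next_try = {name: rng.randrange(max_wait) for name in stations}
    delivered = []
    slot = 0
    while next_try:
        senders = [name for name, t in next_try.items() if t == slot]
        if len(senders) == 1:
            # Only one station talked: the frame arrives intact.
            delivered.append((slot, senders[0]))
            del next_try[senders[0]]
        elif len(senders) > 1:
            # Collision: the data is nonsense, so each sender waits a
            # random time and tries again later.
            for name in senders:
                next_try[name] = slot + 1 + rng.randrange(max_wait)
        slot += 1
    return delivered


for slot, name in send_all(["A", "B", "C", "D"]):
    print(f"slot {slot}: station {name} sent successfully")
```

Real Ethernet refines this by doubling the range of the random wait after each successive collision (binary exponential backoff); the sketch keeps the range fixed for simplicity.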

Obviously, the busier the network is, the more likely a collision will be, and the retransmissions will make things worse. Still, this scheme works very well and functions today in the most common networking system on earth: Ethernet.

Ethernet is essentially Alohanet along a wire. Each computer has one connection to the wire, rather than connections to each of the possible destinations, and collisions are possible. Another consideration makes this scheme work better: the use of packets. Information along these networks is sent in packages of limited size; a standard Ethernet packet carries at most about 1,500 bytes of data. Because no packet is very large, the time needed to send one is short and more or less predictable, and sending data a packet at a time is more efficient than sending a bit or a byte at a time.
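Because packet size is capped, the time to transmit one packet is easy to bound: it is the number of bits divided by the link speed. The sketch below ignores headers and other overhead, and the 10-megabit-per-second speed is just an example figure.

```python
def transmission_time(packet_bytes: int, bits_per_second: float) -> float:
    """Seconds needed to put one packet onto the wire (overhead ignored)."""
    return packet_bytes * 8 / bits_per_second  # 8 bits per byte


# A 1,500-byte packet on a 10-megabit-per-second link:
print(transmission_time(1500, 10_000_000))  # 0.0012 (that is, 1.2 milliseconds)
```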

Each packet contains a set of data bytes intended for another computer, so the packet must also contain information about the destination, the sender, and other important details. For instance, if a data file is bigger than a packet, then it is split into parts that are sent separately, so part of each packet is a sequence number indicating which packet it is (e.g., number 3 of 5). If a particular packet never arrives, the receiver knows which one is missing and can ask the sender to resend it. Packets also include codes used to determine whether an error occurred during transmission.
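Here is one way to sketch the packet fields just described: split data into numbered packets, find which sequence numbers never arrived, and use a checksum to spot damaged data. The `Packet` fields and helper names are hypothetical; the error check uses a CRC-32 from Python's `zlib.crc32`, a similar kind of code to the check Ethernet uses. In real networks, sequence numbers are usually handled by a higher-level protocol such as TCP rather than by Ethernet itself.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Packet:
    sender: str
    destination: str
    seq: int        # which packet this is, counting from 1
    total: int      # how many packets make up the whole message
    payload: bytes
    checksum: int   # CRC-32 of the payload, used to detect errors


def split_into_packets(data: bytes, sender: str, destination: str,
                       size: int = 1500) -> list[Packet]:
    chunks = [data[i:i + size] for i in range(0, len(data), size)] or [b""]
    total = len(chunks)
    return [Packet(sender, destination, n, total, chunk, zlib.crc32(chunk))
            for n, chunk in enumerate(chunks, start=1)]


def missing_packets(received: list[Packet]) -> list[int]:
    """Sequence numbers to ask the sender to resend (assumes one arrived)."""
    total = received[0].total
    have = {p.seq for p in received}
    return [n for n in range(1, total + 1) if n not in have]


def is_corrupted(packet: Packet) -> bool:
    # Recompute the checksum; a mismatch means the payload was damaged.
    return zlib.crc32(packet.payload) != packet.checksum


packets = split_into_packets(b"x" * 7000, "A", "B")  # 5 packets
arrived = [p for p in packets if p.seq != 3]          # packet 3 is lost
print(len(packets), missing_packets(arrived))         # 5 [3]
```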

1. Internet

The Internet is a computer network designed to communicate reliably over long distances. It was originally created to be a reliable communications system that could survive a nuclear attack, and was funded by the military. It is distributed, in that data can be sent from one computer to another in a chain until it reaches its destination.

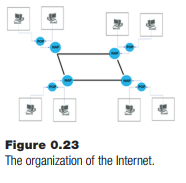

Imagine a collection of a few dozen computers, and that each one is connected to multiple others, but not directly to all others. Computer A wishes to send a message to computer B, and does so using a packet that includes the destination. Computer A sends the message to all computers that it is connected to. Each of those computers sends it to all of the computers that it is connected to, and so on until the destination is reached. All of the computers will receive every message, which is inefficient, but so long as there exists some path from A to B, the message will be delivered.
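The forwarding scheme above (often called flooding) can be simulated with a queue. To keep the simulation from running forever, the sketch adds one assumption not stated in the text: each computer forwards a given message only once. The network map and computer names are hypothetical.

```python
from collections import deque


def flood(network: dict[str, list[str]], source: str, destination: str):
    """Pass a message from source to every neighbour, and so on.

    Returns (delivered, receivers): whether the destination got the
    message, and the set of every computer that saw it.
    """
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in network[node]:
            # Assumption: each computer forwards a given message only once.
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return destination in seen, seen


network = {
    "A": ["C", "D"],
    "B": ["E"],
    "C": ["A", "D", "E"],
    "D": ["A", "C", "F"],
    "E": ["B", "C"],
    "F": ["D"],
}
delivered, receivers = flood(network, "A", "B")
print(delivered, sorted(receivers))  # True ['A', 'B', 'C', 'D', 'E', 'F']
```

Note that computer F receives the message even though it lies on no route from A to B, which is exactly the inefficiency the text points out.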

It would be hard to tell when to stop sending a message in this scheme. Another way to do it is to have a table in each computer saying which computers in the network are connected to which others. A message can then be passed along one computer at a time, each time to a computer known to lie on a short path to the destination; in this case only the computers along the route see the message, not all of them. A new computer added to the network must send a special message to all of the others telling them which of the existing computers it is directly connected to, and this message will propagate to all machines, allowing them to update their maps. This is essentially the scheme used today.
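Given such a map, a computer can work out a short route with a breadth-first search, which finds a path with the fewest hops. This is a simplified sketch: real routing protocols such as OSPF keep a similar map of links but typically use Dijkstra's algorithm with link costs rather than plain hop counts. The function names and the example map are hypothetical.

```python
from collections import deque
from typing import Optional


def shortest_path(network_map: dict[str, list[str]], start: str,
                  goal: str) -> Optional[list[str]]:
    """Fewest-hop route from start to goal, or None if unreachable."""
    came_from = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk backwards from the goal to rebuild the route.
            path = []
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        for neighbour in network_map[node]:
            if neighbour not in came_from:
                came_from[neighbour] = node
                queue.append(neighbour)
    return None


def add_computer(network_map: dict[str, list[str]], new: str,
                 links: list[str]) -> None:
    """Update the map after a new computer announces its direct links."""
    network_map[new] = list(links)
    for other in links:
        network_map[other].append(new)


network = {
    "A": ["C", "D"], "B": ["E"], "C": ["A", "D", "E"],
    "D": ["A", "C", "F"], "E": ["B", "C"], "F": ["D"],
}
print(shortest_path(network, "A", "B"))  # ['A', 'C', 'E', 'B']
add_computer(network, "G", ["A", "B"])
print(shortest_path(network, "A", "B"))  # ['A', 'G', 'B']
```

Only the computers on the printed route handle the message; D and F never see it.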

The Internet has a hierarchy of communication links and processors. First, every computer on the Internet has a unique IP (Internet Protocol) address through which it is reached. Because there are many computers in the world, an IP address is a large number; an example is 172.16.254.1 (obtained from Wikipedia). When a computer in, say, Portland wants to send a message to a computer in, for example, London, the Portland computer composes a packet that contains the message, its own address, and the recipient’s address in London. This packet is sent along the connection to the sender’s Internet service provider at a relatively low speed, perhaps 10 megabits per second. The service provider operates a collection of local computers designed to handle network traffic. This is called a Point of Presence (POP), and it collects messages from a local area and concentrates them for transmission further down the line.
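An address written like 172.16.254.1 (an IPv4 address) is really a single 32-bit number, written as four byte values from 0 to 255 separated by dots. The sketch below combines the four parts by hand with a hypothetical helper `to_number` and compares the result with Python's standard `ipaddress` module.

```python
import ipaddress


def to_number(dotted: str) -> int:
    """Combine the four byte values of a dotted IPv4 address into one integer."""
    a, b, c, d = (int(part) for part in dotted.split("."))
    return ((a * 256 + b) * 256 + c) * 256 + d


print(to_number("172.16.254.1"))                   # 2886794753
print(int(ipaddress.IPv4Address("172.16.254.1")))  # 2886794753 (same result)
print(ipaddress.IPv4Address(2886794753))           # 172.16.254.1
print(format(to_number("172.16.254.1"), "032b"))   # 10101100000100001111111000000001
```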

Multiple POP sites connect to a Network Access Point (NAP) using much faster connections than the ones users have to their POP. The NAP concentrates even more users, and provides a layer of addressing that can be used to send the data to the destination. The Portland user’s POP delivers the message to a relatively local NAP, which sends it to the next NAP along a path to the destination in London using an exceptionally fast (high-bandwidth) data connection. The London NAP sends the message to the appropriate local POP, which in turn sends it to the correct user.
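The up-across-down shape of this hierarchy can be written out as a list of hops. In the toy sketch below, every name (the users, POPs, NAPs, and the backbone route through New York) is hypothetical, chosen only to mirror the Portland-to-London example; real routes are chosen dynamically.

```python
# Hypothetical lookup tables: which POP serves each user, which NAP each
# POP connects to, and a fast backbone route between two NAPs.
POP_OF = {"portland-user": "Portland POP", "london-user": "London POP"}
NAP_OF = {"Portland POP": "Seattle NAP", "London POP": "London NAP"}
BACKBONE = {
    ("Seattle NAP", "London NAP"): ["Seattle NAP", "New York NAP", "London NAP"],
}


def route(sender: str, receiver: str) -> list[str]:
    """Hops a message takes: up to a NAP, across the backbone, back down."""
    up = [sender, POP_OF[sender]]
    across = BACKBONE[(NAP_OF[POP_OF[sender]], NAP_OF[POP_OF[receiver]])]
    down = [POP_OF[receiver], receiver]
    return up + across + down


print(" -> ".join(route("portland-user", "london-user")))
# portland-user -> Portland POP -> Seattle NAP -> New York NAP -> London NAP -> London POP -> london-user
```

Every machine on that list handles the packet, which is why the next point about reading messages in transit matters.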

An important consideration is that the message can be read by any POP or NAP server along the route. Data sent over the Internet is public unless the users properly encrypt it.

2. World Wide Web

The World Wide Web, or simply the Web, is a layer of software above the Internet protocols. It is a way to access files and data remotely through a visual interface provided by a program called a browser, which runs on the user’s computer. When someone accesses a Web page, a file that describes that page is downloaded to the user’s browser and displayed. That file is text in a particular format, and the file name usually ends in .html or .htm. The file holds a description of how to display the page: what text to display, where images that are part of the page can be found, how the page is formatted, and where other connected pages (links) are found on the Internet. Once the file is downloaded, the local (receiving) computer does all of the work of displaying it, such as playing sounds and videos and drawing graphics and text.
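To see that a page file really is a text description of text, images, and links, the sketch below feeds a tiny made-up HTML page to Python's standard `html.parser.HTMLParser` and collects those three things, roughly the first step a browser takes before drawing anything. The class name `PageSummary` and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class PageSummary(HTMLParser):
    """Collect the parts of a page description that a browser acts on."""

    def __init__(self):
        super().__init__()
        self.text: list[str] = []
        self.images: list[str] = []
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img" and "src" in attrs:
            self.images.append(attrs["src"])  # where an image can be found
        elif tag == "a" and "href" in attrs:
            self.links.append(attrs["href"])  # a link to another page

    def handle_data(self, data):
        if data.strip():
            self.text.append(data.strip())    # text to display


page = """<html><body>
<h1>Welcome</h1>
<p>See the <a href="http://www.example.com/next.html">next page</a>.</p>
<img src="images/logo.png">
</body></html>"""

summary = PageSummary()
summary.feed(page)
summary.close()
print(summary.text)    # ['Welcome', 'See the', 'next page', '.']
print(summary.images)  # ['images/logo.png']
print(summary.links)   # ['http://www.example.com/next.html']
```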

The Web is the basis for most of the modern advances in social networking and public data access. The Internet provides the underlying network communications facility, while the Web uses that to fetch and display information requested by the user in a visual and auditory fashion. Podcasts, blogs, and wikis are simple extensions of the basic functionality.

The Web demands the ability for a user in Portland to request a file from a computer in London and to have that file delivered and made into a graphical display, all with a single click of a mouse button. Web pages are files that reside on a computer that has an IP address, but the IP address is usually hidden behind a symbolic name that forms part of a Uniform Resource Locator (URL). Almost everyone has seen one of these (http://www.facebook.com is one example). Every Web page has a unique address based on a URL. Anyone can create a new Web page with its own unambiguous URL at any time, and most of the world would be able to view it.
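A URL bundles several pieces of information together. Python's standard `urllib.parse.urlparse` splits one into its parts; the URL below is a made-up example.

```python
from urllib.parse import urlparse

url = urlparse("http://www.example.com/docs/index.html")
print(url.scheme)    # http              (the protocol used to fetch the page)
print(url.hostname)  # www.example.com   (the symbolic name of the computer)
print(url.path)      # /docs/index.html  (which file on that computer)
```

On a computer with network access, `socket.gethostbyname(url.hostname)` asks the Domain Name System for the IP address hidden behind the symbolic name.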

The Web is an example of what programmers call a client-server system. The client is the computer where the person requesting the Web page is, and it makes the request. The server is the computer where the Web page itself resides, and it satisfies the request. Other examples of such systems include online computer games, email, Skype, and Second Life.
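A client-server exchange can be tried on a single machine. In this minimal sketch, a server built with Python's standard `http.server` module answers every request with a small HTML page, and a client built with `http.client` requests a page and reads the reply. Both run in one program on the local address 127.0.0.1, whereas on the real Web they would be different computers; the handler name `PageHandler` and the page content are hypothetical.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class PageHandler(BaseHTTPRequestHandler):
    """The server side: answers every GET request with a tiny HTML page."""

    def do_GET(self):
        body = b"<html><body><p>Hello from the server</p></body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the per-request log lines for this demo


# Port 0 asks the operating system to pick any free port on this machine.
server = HTTPServer(("127.0.0.1", 0), PageHandler)
port = server.server_address[1]
threading.Thread(target=server.serve_forever, daemon=True).start()

# The client side: request a page, as a browser would, and read the reply.
connection = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
connection.request("GET", "/index.html")
reply = connection.getresponse()
status, page = reply.status, reply.read().decode()
connection.close()

server.shutdown()      # stop serve_forever() in the background thread
server.server_close()  # release the listening socket
print(status, page)
```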

Source: Parker, James R. (2021). Python: An Introduction to Programming (2nd ed.). Mercury Learning and Information.